This post was written by Michelle Lochner.

A few months ago, Google made headlines when they released images of “dreams” from their image-recognition neural network. In a process they called “Deep Dreaming”, the neural network could turn any normal image into a fantastical dreamscape, built entirely from things the network had seen during training. I decided to experiment with Google’s publicly available deep dreaming tools and asked the question: what does the CMB look like when the network dreams about it? The post below is reproduced from my research blog, http://doc-loc.blogspot.co.uk/.

You may have heard about Google’s Deep Dreaming network, which turns completely normal pictures of clouds into surreal cityscapes.

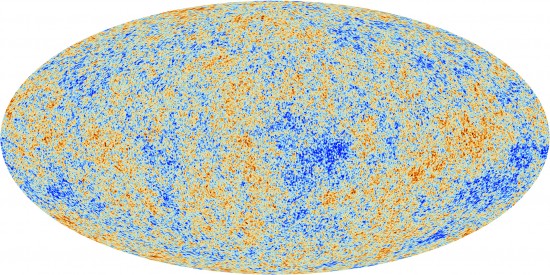

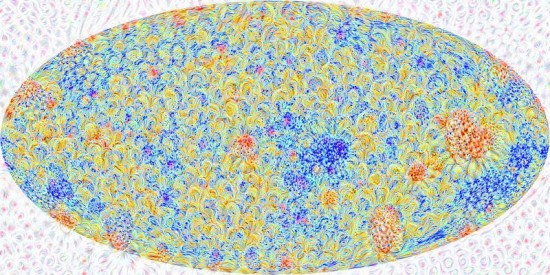

I was curious about what would happen if I asked the network to dream about the cosmic microwave background (CMB), the baby photo of the Universe. The CMB is the background radiation left over by the Big Bang and is one of the most important probes of the Universe that we have. As a pattern, it looks pretty close to noise, so I had no way of knowing what might come out of Google’s dreams.

What is Deep Dreaming? Well, it all grew out of search-engine giant Google’s desire to solve the image-recognition problem. Recognising what is in a picture is something humans do very easily at just a few weeks of age, but it is notoriously difficult to get a computer to do. However, Google does seem to be cracking the problem with their deep learning algorithms. They’re using convolutional neural networks: neural networks with many stacked layers, many of which scan small learned filters across the image. So it seems Google has had the most success with a machine learning algorithm that loosely imitates the human brain.

Deep Dreaming is, I suspect, what the people at Google came up with while they were trying to understand exactly what was going on inside their algorithms (machine learning can be a dark art). The original blog post is excellent, so rather than repeat it here I’ll show you what I got when playing around with their code.

You can actually submit any image you like here and get an ‘inceptionised’ image out with default settings, but I wasn’t happy with the result (the default settings seem optimised to pick up eyes), so I decided to dig deeper. For the technically inclined, it’s not that hard to run your own network and change the settings to get the strange images in Google’s gallery. Everything you need is in the publicly available GitHub repository, including a great IPython notebook for doing this yourself.

I took a (relatively low-resolution) image of the CMB from the Planck satellite to test the deep dreaming methods on. Basically, all of the DeepDream images are produced by picking something and then telling the neural net to modify the input image so that it looks as much like that thing as possible. What you pick determines the images you get out.

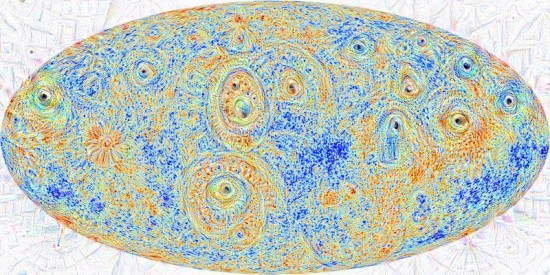

Google’s trained convolutional neural network (GoogLeNet, in the released code) is built from over a hundred layers, each responding to particular kinds of features the network learned from its training set. When running the network on an image, you can pick out one of these layers and enhance whatever it responds to.

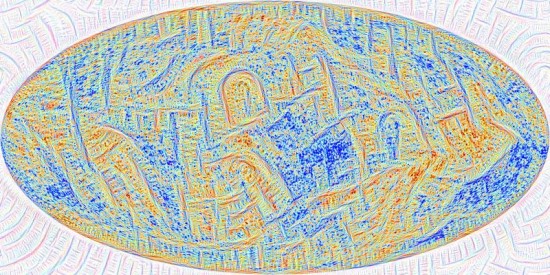

Picking out higher-level layers tends to bring out smaller, more angular features, whereas lower-level layers tend to produce smoother, more impressionistic features.
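Under the hood, “enhancing” a layer is gradient ascent on the input image: the pixels are nudged, step by step, so that the chosen layer’s activations get stronger (Google’s notebook maximises their squared norm). The sketch below illustrates only that idea and is not the actual DeepDream code. It assumes a hypothetical `toy_layer` (a random linear map plus ReLU acting on a flattened 4×4 image) in place of a real GoogLeNet layer; the normalised step and the default `step_size=1.5` are modelled on Google’s notebook, while the real code also adds random jitter and works over several image scales.

```python
import numpy as np

def toy_layer(x, W):
    """Hypothetical stand-in for one network layer: a linear map followed by ReLU."""
    return np.maximum(W @ x, 0.0)

def objective(x, W):
    """L2 objective: half the squared norm of the layer's activations."""
    return 0.5 * np.sum(toy_layer(x, W) ** 2)

def make_step(x, W, step_size=1.5):
    """One gradient-ascent step on the image x for the L2 objective."""
    a = toy_layer(x, W)
    # The gradient of 0.5*||a||^2 w.r.t. the activations is a itself;
    # back-propagating through ReLU and W gives W^T a
    # (ReLU's derivative is zero exactly where a is zero).
    g = W.T @ a
    # Normalise by the mean absolute gradient so the step size is scale-free.
    return x + step_size / (np.abs(g).mean() + 1e-8) * g

def enhance_layer(x, W, n_steps=10, step_size=1.5):
    """Repeatedly nudge x so that the chosen layer responds more strongly."""
    for _ in range(n_steps):
        x = make_step(x, W, step_size)
    return x

# Usage: a random 'layer' with 64 feature channels on a flattened 4x4 image.
rng = np.random.default_rng(0)
W = rng.normal(size=(64, 16))
image = rng.normal(size=16)
dreamed = enhance_layer(image, W)
print(objective(image, W), "->", objective(dreamed, W))
```

In this toy picture, picking a different layer amounts to swapping `W` for a different set of learned filters, so the same update rule brings out different features.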

You can also ask the network to try to find something in particular within your image. For the technically inclined, this is a bit like placing a strong prior on the network so that it optimises towards a particular image. In this case, I asked it to find flowers in the CMB.

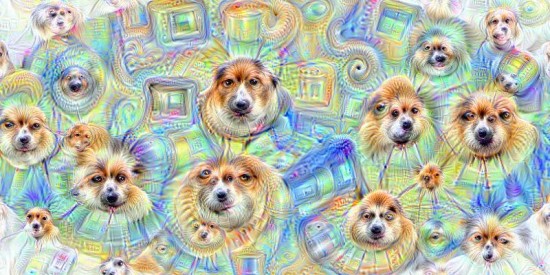
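Finding flowers works by changing the objective rather than the optimiser. Assuming the guided-dreaming approach from Google’s notebook, the guide image (here, presumably a picture of flowers) is passed through the same layer, and at every position of the input the gradient is set to the guide feature vector that matches it best, i.e. the one with the largest dot product. Below is a minimal NumPy sketch of just that matching step, with the activations already flattened to (channels, positions) arrays; the function name is hypothetical and the forward and backward passes through the network are omitted.

```python
import numpy as np

def guide_objective_grad(feats, guide_feats):
    """Gradient w.r.t. the layer activations for guided dreaming.

    feats:       (channels, N) activations of the input image at N positions.
    guide_feats: (channels, M) activations of the guide image at M positions.
    Returns a (channels, N) array: for each input position, the guide feature
    vector with the largest dot product against that position's features.
    """
    scores = feats.T @ guide_feats      # (N, M) matrix of dot products
    best = scores.argmax(axis=1)        # best-matching guide position for each input position
    return guide_feats[:, best]         # (channels, N)

# Usage with two channels, two input positions and three guide positions.
feats = np.array([[1.0, 0.0],
                  [0.0, 1.0]])
guide = np.array([[2.0, 0.0, 1.0],
                  [0.0, 3.0, 1.0]])
print(guide_objective_grad(feats, guide))
```

For plain layer enhancement, the equivalent gradient with respect to the activations is just `feats` itself; the rest of the update stays the same.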

Now comes the fun stuff. You ask the network to generate an image as before, focusing on a specific layer. Then you take the output image, apply some small transform (like zooming in a little) and run it through the network again. Repeating this over and over is Google’s dreaming. You can start with almost any image you like. Playing around, I eventually found “the dog layer”, where it continuously found dogs in the sky.
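The dreaming loop itself is simple: dream, keep the frame, zoom in slightly, repeat. The sketch below assumes `dream_fn` is any callable that enhances a layer of a whole image; it is a hypothetical parameter, not part of Google’s code. The zoom of 5% per frame follows Google’s notebook, but where the notebook uses `scipy.ndimage.affine_transform` with linear interpolation, this version swaps in plain nearest-neighbour cropping with NumPy to stay dependency-free.

```python
import numpy as np

def zoom_in(frame, s=0.05):
    """Crop the central (1 - s) fraction of the frame and stretch it back to full size.

    Uses nearest-neighbour sampling; works for (h, w) or (h, w, channels) arrays.
    """
    h, w = frame.shape[:2]
    # Map every output pixel back into the central region of the input.
    rows = (np.arange(h) * (1 - s) + h * s / 2).astype(int)
    cols = (np.arange(w) * (1 - s) + w * s / 2).astype(int)
    return frame[np.ix_(rows, cols)]

def dream_sequence(frame, dream_fn, n_frames=100, s=0.05):
    """Feed each dreamed frame, slightly zoomed, back into the dreamer."""
    frames = []
    for _ in range(n_frames):
        frame = dream_fn(frame)       # enhance the chosen layer (hypothetical callable)
        frames.append(frame.copy())   # keep this frame for the video
        frame = zoom_in(frame, s)     # small transform before the next pass
    return frames

# Usage with a do-nothing dreamer, just to exercise the zoom loop.
img = np.arange(100, dtype=float).reshape(10, 10)
frames = dream_sequence(img, lambda f: f, n_frames=3, s=0.2)
print(len(frames), frames[1].shape)
```

Writing each entry of `frames` to disk as an image and stitching them together gives a zooming dream video.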

I made a video of a few hundred iterations of this (note: I lengthened the first few frames, otherwise they go by way too fast). I find it really appropriate that after a while it starts finding what really looks like a bunch of microwaves in the cosmic microwave background radiation… Enjoy the show!